The AI industry is on the verge of a standards war, as tech giants Microsoft, Intel, Qualcomm, and Apple prepare to release devices that prioritize on-device AI capabilities. Microsoft and Intel have defined their vision for an “AI PC,” but Nvidia, the current AI leader, has alternative plans, which may lead to competing standards and market fragmentation.

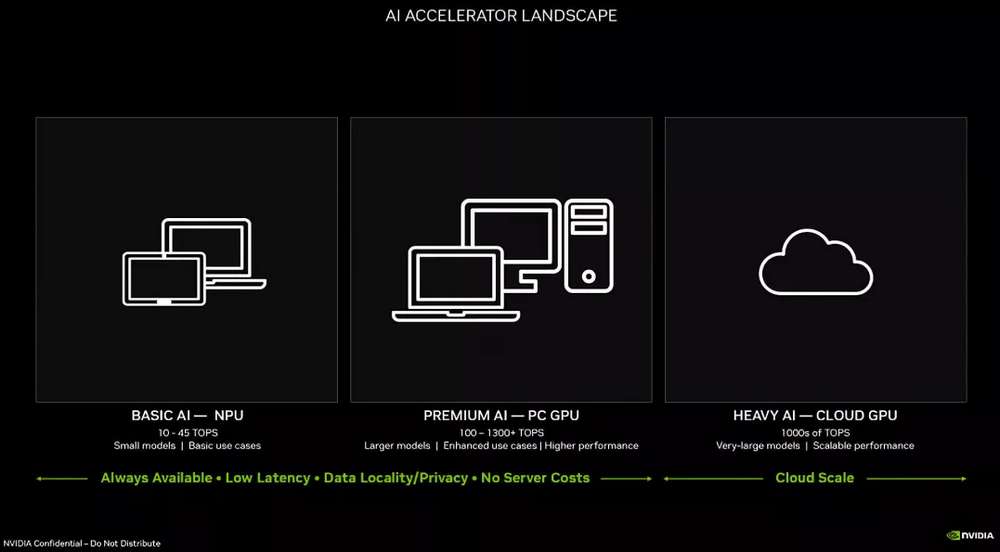

Nvidia favors discrete GPUs over Neural Processing Units (NPUs) for local generative AI applications, according to a leaked presentation. This preference may be driven by Nvidia’s dominance in large language model processing, which has significantly boosted its earnings. Meanwhile, Intel has been promoting its Meteor Lake CPUs with embedded NPUs as “AI PCs” for cloud-independent generative AI operations. Microsoft, Qualcomm, and Apple are set to follow with their own AI-enabled devices, further expanding the AI PC market.

Microsoft is trying to position its services as essential to the emerging AI PC market by mandating its Copilot virtual assistant and a new Copilot key for all AI PCs. Nvidia disagrees, arguing that its RTX graphics cards, available since 2018, are better suited to AI tasks and make NPUs unnecessary. By that reasoning, Nvidia implies, millions of devices already qualify as “AI PCs.”

Nvidia challenges Microsoft’s claim that AI PCs require 40 trillion operations per second (TOPS) for next-generation performance. According to Nvidia’s presentation, its RTX GPUs can reach 100 to 1,300 TOPS, which the company says makes them suitable for demanding applications such as content creation, productivity, and large language model processing. The presentation highlights the RTX 30 and 40 series graphics cards, which Nvidia claims can outperform Apple’s M3 processor and deliver “flagship performance” in certain tasks.

Nvidia has released a significant update for ChatRTX, a chatbot that uses the company’s TensorRT-LLM technology to run locally on PCs equipped with RTX 30- or 40-series GPUs and at least 8 GB of VRAM. What sets ChatRTX apart is its ability to answer queries in multiple languages by scanning user-provided documents or YouTube playlists. It supports formats including text, PDF, DOC, DOCX, and XML.

Nvidia’s claims of superior on-device AI performance are unlikely to deter competitors, and the NPU market is expected to grow more competitive as companies try to match or surpass Nvidia’s capabilities. That competition should drive innovation and R&D, which would benefit both the industry and consumers. How rival companies respond to Nvidia’s approach, and whether they can improve on it, remains to be seen.
